Corduroy's Link Hoarding Cupboard

What is this page?

Welcome to my humble abode. I've become something of an online trashman over the past few years -- I often stumble upon information that I don't want to lose, so I organize it here for my convenience (and maybe yours too; dig around, maybe you'll find something cool). This is also a place to commemorate people who I admire. I like to humanize the gurus and legendary personas that I read and hear about. I find that birthdays help with this -- did you know that Ken Thompson and I almost share a birthday!? Have a good stay.

The history and people of computing

Much like everything else in life, computer science didn't appear out of thin air. I seldom come across anyone interested in the culture and history of computing and its subgroups, which is sad and kind of strange. The public view of CS seems to paint it as an ultra-practical skillset and a pathway to economic success. Perhaps this is a symptom of the divide between STEM and the humanities, or the inevitable side effect of Late Capitalism. Either way, to the layman, this rich history is virtually unknown, and computer science seems to be boiled down to the technical sum of its parts.

With that in mind, here's a list of people worth knowing (in no particular order):

Richard Stallman (rms), Eric S. Raymond (esr), Linus Torvalds, Jon Hall (maddog), Dennis M. Ritchie (dmr), Ken L. Thompson (ken), Brian W. Kernighan (bwk), Donald Knuth (dek), John von Neumann, John G. Kemeny, Alan Turing, Ada Lovelace, Charles Babbage, Tim Berners-Lee, Doug Engelbart, Edsger Dijkstra, John McCarthy, Aaron Swartz, Terry Davis, Joe Armstrong, Francesco Vianello, Richard Feynman, Leonard Susskind, Isaac Newton, Gottfried Wilhelm Leibniz, Leonhard Euler, George Boole, Paul Erdős, Vint Cerf

This list is severely incomplete.

Great reads

* In the Beginning was the Command Line

* A history of the Amiga (parts 1 - 12) (1) (2) (3) (4) (5) (6) (7) (8) (9) (10) (11) (12)

* Everything on catb.org

* Learn about Free Software from Stallman, here

* Why Erlang is the only true computer language

* The Lesson of Grace in Teaching

* Gödel, Escher, Bach: an Eternal Golden Braid

* Hackers: Heroes of the Computer Revolution

* Diligence, Patience, and Humility by Larry Wall

* The Humble Programmer by Edsger W. Dijkstra

* Thoughts on Glitch Art by Nick Briz

* A series on parsing by Jeffrey Kegler (1) (2)

* Scribe: A Document Specification Language and its Compiler

* The Meme Hustler (an essay on so many things, some of which I disagree with)

* Hackers and Painters by Paul Graham

* What Makes History? by Michael S. Mahoney

* The Eternal Mainframe by Rudolf Winestock

* The Web of Alexandria (1) and (2) by Bret Victor

+ Related: 'Prints' by Rob Pike

* The Rise of "Worse is Better" by Richard Gabriel

* The future of software, the end of apps, and why UX designers should care about type theory by Paul Chiusano

* The Emperor's Old Clothes by C.A.R. Hoare

* Semantic Compression by Casey Muratori

Favorite videos

* Python as C++’s Limiting Case, by Brandon Rhodes

* The Mess We're In, by Joe Armstrong

* Terry Davis's McDonald's Interview

* Brian Kernighan Interviews Ken Thompson

* DNA: The Code of Life by Bert Hubert

* Light Years Ahead | The 1969 Apollo Guidance Computer

* Escape from the ivory tower: the Haskell journey

* Steve Wozniak at "Intertwingled" Festival, 2014

* Ted Nelson's Eulogy for Douglas Engelbart

* Ted Nelson Understands Computers

* Jaron Lanier at "Intertwingled" festival, 2014

* Network Literacy by Howard Rheingold

Birthdays!

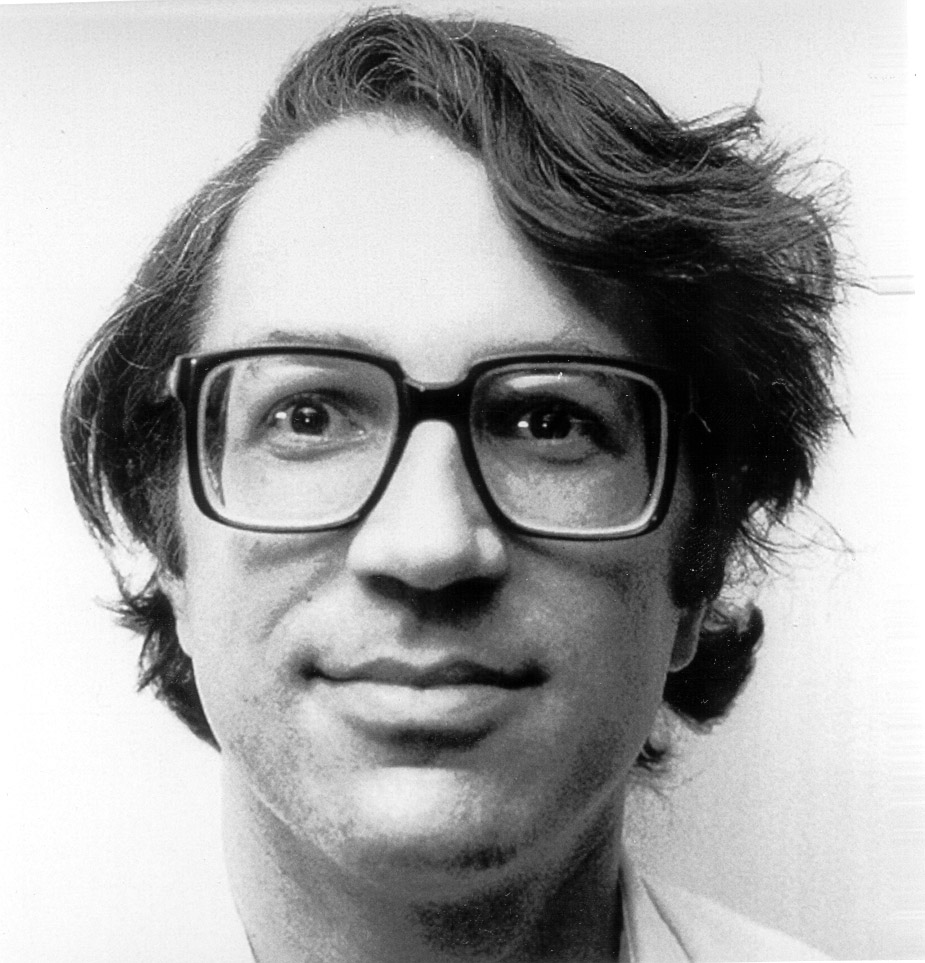

Richard Stallman

March 16th, 1953

Ken Thompson

February 4th, 1943

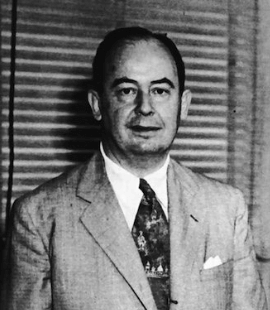

John von Neumann

December 28th, 1903

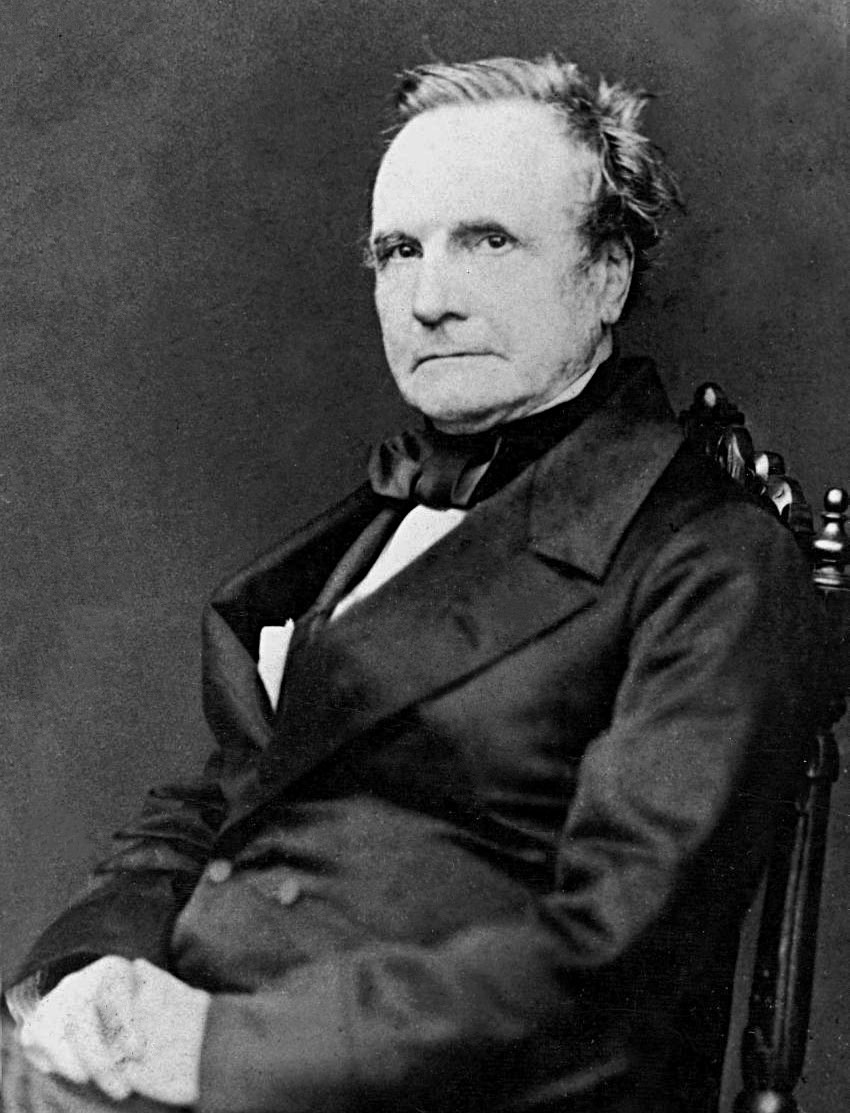

Charles Babbage

December 26th, 1791

Kurt Gödel

April 28th, 1906

Slavoj Žižek

March 21st, 1949

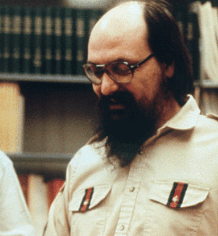

Eric Raymond

December 4th, 1957

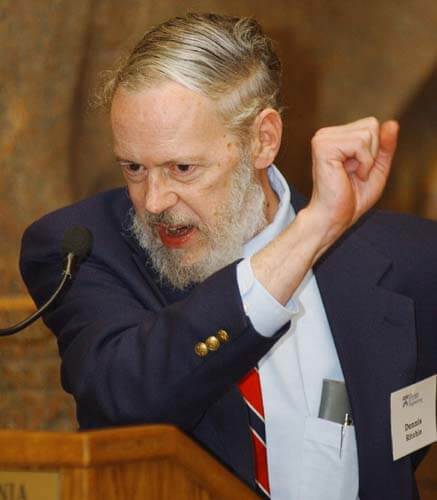

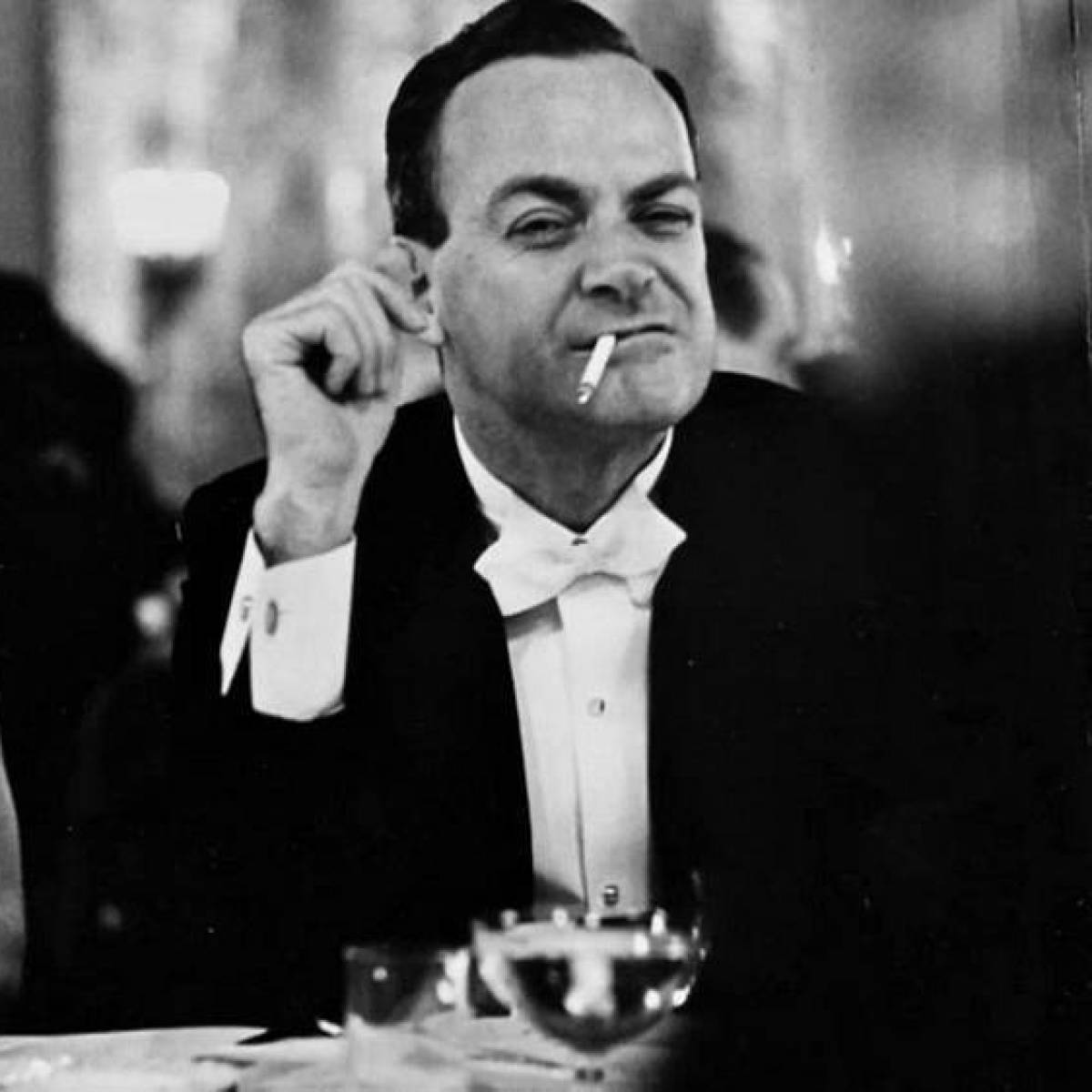

Dennis Ritchie

September 9th, 1941

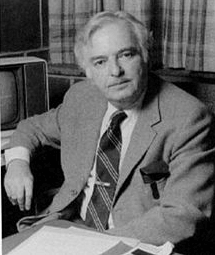

John G. Kemeny

May 31st, 1926

Augusta Ada King, Countess of Lovelace

December 10th, 1815

Maurits Cornelis Escher

June 17th, 1898

Richard Feynman

May 11th, 1918

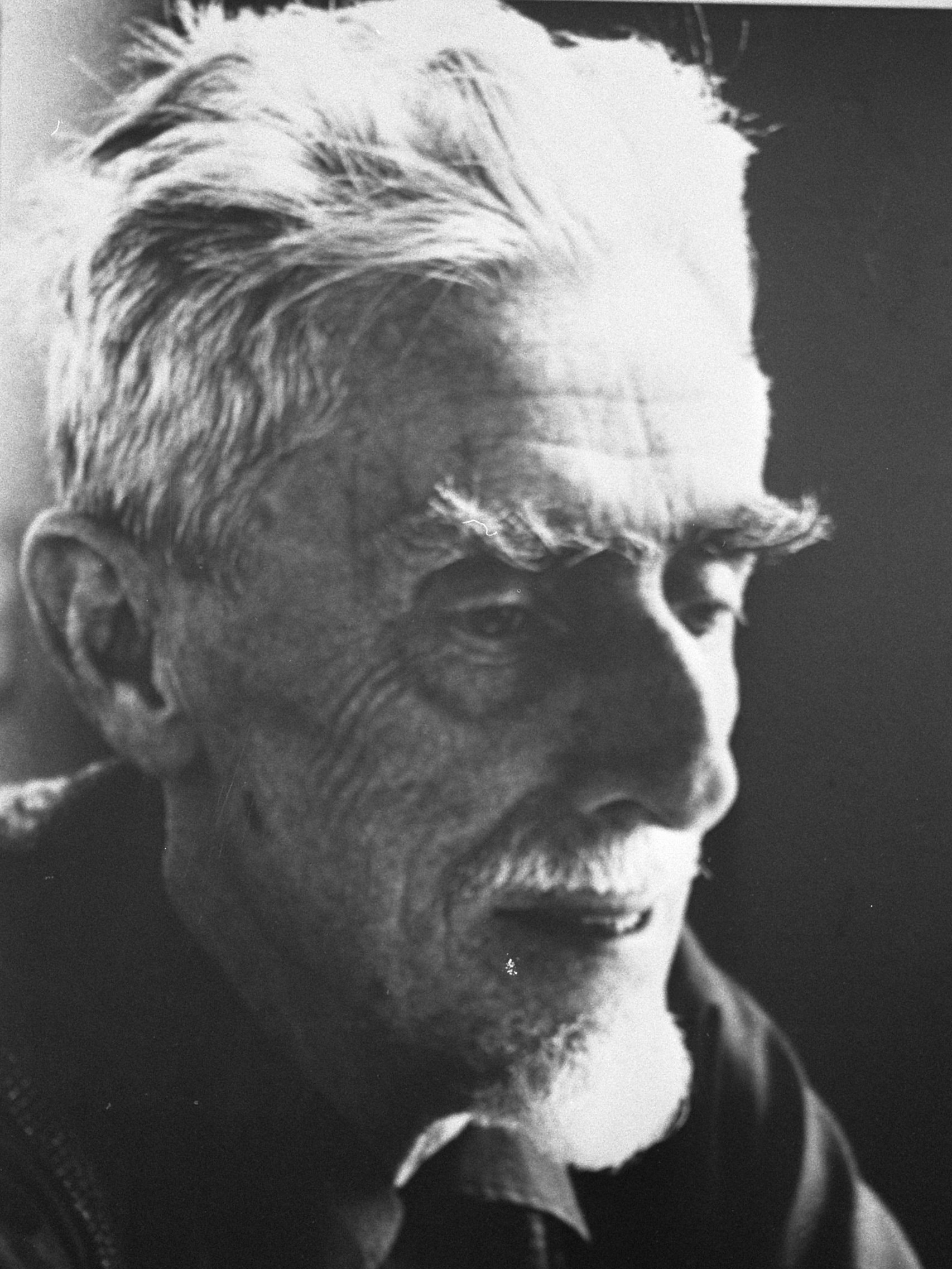

Jon Hall

August 7th, 1950

Brian Kernighan

January 1st, 1942

Alan Turing

June 23rd, 1912

Joseph Marie Jacquard

July 7th, 1752

Johann Sebastian Bach

March 31st, 1685

Larry Wall

September 27th, 1954

Kool Links

Computer-related

* Javidx9, a friendly computer wizard

* The Cherno Project's C++ Tutorials

* Harvard CS50 Intro To Computer Science Videos

* Stanford Computer Science Nifty Assignments

* Functional Programming in OCaml

* Computer Science from the Bottom Up

* +ORC

* DaedTech (Existentialist Programmer Thoughts)

* Reflections on Trusting Trust

* Nils M Holm's Bits and Pieces

* Handmade Hero (click this for some context)

* Moss (Measure Of Software Similarity)

* Find LaTeX Symbol's Code by Drawing It

* The Whitney Museum Portal to Net Art

* WikiWikiWeb, the original Wiki

* Making Homebrew PS1 Games in C

* RackAFX7, a DAW Plug-In Designer Software

* Michael Abrash’s Graphics Programming Black Book

* Carbon, Create and share beautiful images of your source code

* What every computer science major should know

* Real Programmer: The Story of Mel

* Racism is Socially Engineered Injustice

* bikeshed

* How to Report Bugs Effectively

* Richard Feynman and The Connection Machine

* The Graphing Calculator Story

* Suricrasia Online's Online Library (Meme)

* The Cursed Computer Iceberg Meme

* Kragen, Software Security Holes

Weblogs

* Steve Yegge's blog and other blog

Miscellaneous

* Professor Leonard's Math Videos

* Whitechapel: Documents of Contemporary Art

* Textures

Quotes, terms, an oxford comma, and etcetera

Playing over these moves is an eerie experience. They are not human; a grandmaster does not understand them any better than someone who has learned chess yesterday. The knights jump, the kings orbit, the sun goes down, and every move is the truth. It's like being revealed the Meaning of Life, but it's in Estonian.

Today science is biased towards rejecting any theory involving God. It is called naturalism. It proposes that any and all observable phenomena must have a natural cause. It also has the side-effect of barring God from having any influence in the world. If it appears that God has an influence in the world, a different explanation must be found. And men are masters of creating alternative explanations. It is called skepticism.

Education is an extension of the human instinct to reproduce. One of the things we should be doing is to purposely invent better ways to think, and then figure out how to teach them to children to create much more able adults than we are.

Anyone can cook, but only the fearless can be great

Hedonic treadmill

From soup to nuts

Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.

Most Non-Unix managers conclude that VI is either extraterrestrial in origin or was devised by the original Unix developers as part of a secret communications code to reach another dimension.

xyzmy@pantsexample.com -- To email me, first you have to remove my pants.

Benevolent Dictator For Life

The moral is obvious. You can't trust code that you did not totally create yourself.

im 86 years old, and, uh, im in the bathtub with two crocodiles, they're like pet crocodiles, cause real crocodiles have gone extinct, so they're like small and pink, and they're crawling all over me, im drinking nice, moroccan coffee, and im listening to old chief keef demos, and just like, beautiful, women everywhere, exotic pets, and i don't know i go down and check the mail

chrestomathy

In the 80s and 90s, engineers built complex systems by combining simple and well-understood parts. The goal of SICP was to provide the abstraction language for reasoning about such systems. Today, this is no longer the case. Engineers now routinely write code for complicated hardware that they don’t fully understand (and often can’t understand because of trade secrecy.) The same is true at the software level, since programming environments consist of gigantic libraries with enormous functionality. Programming today is “More like science. You grab this piece of library and you poke at it. You write programs that poke it and see what it does. And you say, ‘Can I tweak it to do the thing I want?'”. The “analysis-by-synthesis” view of SICP — where you build a larger system out of smaller, simple parts — became irrelevant. Nowadays, we do programming by poking.

I have no idea if other fields have this same problem -- my guess is that physicists are particularly prone to it, since we are trained early on to think that physicists are simply smarter than chemists or biologists. Those other fields are for the hard workers. We don’t put mathematicians on this scale, because we secretly believe they’re smarter than us. (Note to the biologist lynch mob: tongue is in cheek.)

Enjoy your work

ex nihilo nihil fit, "nothing comes from nothing"

one should base one’s work satisfaction on realistic achievements, such as advancing the state of knowledge in one’s specialty, improving one’s understanding of a field, or communicating this understanding successfully to others, rather than basing it on exceptionally rare events, such as spectacularly solving a major open problem, or achieving major recognition from one’s peers.

There is no royal way to geometry

we should be getting as much baloney as possible out of our own sandwiches.

after cultivating the right habits around doing nothing, you can actually obtain animal consciousness where you do not desire to do things.

Everybody writes a screen editor. It's easy to do and makes them feel important. Tell them to work on something useful.

Dotfile Madness. We are no longer in control of our home directories.

What you do need is some amount of time spent on the idea that computer programs are mathematical objects which can be reasoned about mathematically. This is the part that the vast majority of people are missing nowadays, and it can be a little tricky to wrap your brain around at first.

of course it runs NetBSD

Ken Thompson was once asked what he would do differently if he were redesigning the UNIX system. His reply: "I'd spell creat with an e."

Radio on TV

C is a Spartan language, and so should your naming be. Unlike Modula-2 and Pascal programmers, C programmers do not use cute names like ThisVariableIsATemporaryCounter. A C programmer would call that variable tmp, which is much easier to write, and not the least more difficult to understand.

free software without any warranty but with best wishes

Why don't you tell us what you are attempting to accomplish and perhaps we can suggest an easier way to "skin this cat".

I find it bizarre that people use the term "coding" to mean programming. For decades, we used the word "coding" for the work of low-level staff in a business programming team. The designer would write a detailed flow chart, then the "coders" would write code to implement the flow chart. This is quite different from what we did and do in the hacker community -- with us, one person designs the program and writes its code as a single activity. When I developed GNU programs, that was programming, but it was definitely not coding. Since I don't think the recent fad for "coding" is an improvement, I have decided not to adopt it. I don't use the term "coding", except if I were talking about a business programming team which has coders.

anything that’s truly real can stand up to scrutiny

If you are anything like me, then you are not an astrophysicist.

nota bene, "note well"

Leaning toothpick syndrome

A notable group of exceptions to all the previous systems are Interactive LISP [...] and TRAC [...] Their only great drawback is that programs written in them look like King Burniburiach's letter to the Sumerians done in Babylonian cuneiform!

Neo-Luddism

I never metacharacter I didn't like

Sneakernet

lots of cream, lots of sugar

People often miss this, or even deny it, but there are many examples of object-oriented programming in the kernel. Although the kernel developers may shun C++ and other explicitly object-oriented languages, thinking in terms of objects is often useful. The VFS [Virtual File System] is a good example of how to do clean and efficient OOP in C, which is a language that lacks any OOP constructs

Thompson: One day I got this idea -- pipes, essentially exactly as they are today. I put them in the operating system in an hour -- they're trivial, they really are super trivial when you've got redirecting IO like UNIX already had.

The idea was just mind blowing to us. Dennis and I came in and rewrote everything in the world -- our world, in one night. We converted everything. Mostly what we did was throw out extraneous messages. Like, sort would never say "hey I'm sorting, I'm merging, I'm doing this, I'm working on this file". All that garbage is gone -- sort would read, sort, then write. And suddenly sort was what we'd call a "filter" in that day. Then we converted everything that processed something into filters. It was massive, and just exciting.

Kernighan: And the world changed, essentially overnight.

Thompson: I've always been interested in chess, I played it when I was in 7th grade because that's when Bobby Fischer was right at his height. Bobby Fischer and I are like 10 years apart in age, except that he's dead. So, I would come home and on the cover of Life Magazine there'd be "Bobby Fischer". And here I am, exactly the same age, and what do I do? So I felt very very, I don't know, worthless. I joined the chess club and played chess in school, and was good at it, but I didn't like it. I didn't like to win -- because you feel sorry for somebody who'd take it seriously, and I didn't like to lose of course. And that cut down on my options.

In order to do evil things like convert raw bytes to floats, I chose to use the “unsafe” package, which made me feel manly, powerful, and highly supportive of private gun ownership.

A mathematician’s distance from the center of his universe is often measured by Erdős number -- how many degrees of coauthorship separate him and the legendary Paul Erdős. It has been my good fortune to snag a Ritchie-Thompson number of one.

Java: the elegant simplicity of C++ and the blazing speed of Smalltalk.

Lehrer went on to describe his official response to the request to use his song: "As sole copyright owner of 'The Old Dope Peddler', I grant you motherfuckers permission to do this. Please give my regards to Mr. Chainz, or may I call him 2?"

To be “good at math” is a direct reference to the K to 12 math curriculum

0xDEADBEEF

Tautological cat is tautological

Erdős number

The majority of ordinary people want to live a peaceful life. In fact, the people who are the most determined to live a peaceful and calm life are constantly running into capitalism as an insurmountable obstacle to living it. Every single day we're pushed aside, pushed at the job, we're put under all kinds of pressure in the family and society -- anywhere we go in capitalist society, which impedes on our very modest wish to live a perfectly ordinary, non-eventful and harmonious life. Because of the pressures the crisis of capitalism puts on these people, you see that the people who are most determined to live a normal and tranquil life are pushed towards drawing more and more radical and revolutionary ideas, contrary to their wishes. And that in fact is the driving force of all revolutions.

To be a Hegelian is to have a big stomach. You've got the whole world in thought. That's how we understand someone like Zizek -- he's got a very big philosophical stomach... there's nothing he can't digest.

You are Not Expected to Understand This

‘You are not expected to understand this’ was intended as a remark in the spirit of ‘This won’t be on the exam,’ rather than as an impudent challenge.

What Are You Afraid Of? Another way of looking at it is that you’re picking a license based on what you are afraid of. All of these licenses assume you’re afraid of being sued. The MIT license is if you’re afraid no one will use your code; you’re making the licensing as short and non-intimidating as possible. The Apache License you are somewhat afraid of no one using your code, but you are also afraid of legal ambiguity and patent trolls. With the GPL licenses, you are afraid of someone else profiting from your work (and ambiguity, and patent trolls). This is a radical simplification, but if nothing else it can be a helpful framework in discussing with your attorney what license makes sense for your software.

Andy giveth, and Bill taketh away.

int risultato;

risultato = addizione(5,6);

Lorinda Cherry told me that RTM (senior) used to test people's programs by feeding them to themselves as input, a.out < a.out. It helped cure people of the assumption that a program would only see "reasonable" inputs.

There's More Than One Way To Do It

Richard Stallman - The Last of the Hackers, he vowed to defend the principles of hackerism to the bitter end. Remained at MIT until there was no one to eat Chinese food with.

Shaving off an instruction or two was almost an obsession with them. McCarthy compared these students to ski bums. They got the same kind of primal thrill from “maximizing code” as fanatic skiers got from swooshing frantically down a hill. So the practice of taking a computer program and trying to cut off instructions without affecting the outcome came to be called “program bumming.”

To err is human; to forgive, divine.

Nothing further

The Useless Use of Cat Award

Helen: As you know, god hates brute force

The sonavabich didn't study astronomy

Tesla doesn't have a cafeteria

Unix herder

enough _ to be dangerous

Enjoy every sandwich

The hacker ethic that played such a large part in advancing computer science, building gcc, building Linux, indeed building the world's computer systems and engineering the biggest peaceful economic boom in history, is more than just a thirst for knowledge about computers. It's the obsessive belief that knowledge exists to be shared, that helping someone by making their computer run better (or their air conditioner) is one of life's joys, and that the rules that prevent sharing and helping exist to be broken. [...] The hero is the one who knows how to fix things, and fixes them -- despite not being "authorized." The evil is the paperwork we construct around ourselves, the forms and regulations that take the place of people freely helping each other.

Next time your boss comes to you and asks "Can't you just...?" Stop. Think about what he just asked. Your boss is managing complexity and he doesn't even know it, and he's just described the interface he wants. Before you dismiss him as asking for the impossible, at least consider whether or not you could arrange things so that it looks like you're doing the really simple thing he's asking for, rather than making it obvious to all your users that you're doing the really complex thing that you have to do to achieve what he asked for. You know that's what you're doing, but you don't have to share your pain with people who don't know or care about the underlying complexity.

The fact is, your brain is built to do Perl programming. You have a deep desire to turn the complex into the simple, and Perl is just another tool to help you do that--just as I am using English right now to try to simplify reality. I can use English for that because English is a mess. This is important, and a little hard to understand. English is useful because it's a mess. Since English is a mess, it maps well onto the problem space, which is also a mess, which we call reality. Similarly, Perl was designed to be a mess (though in the nicest of possible ways).

Our variable and function naming convention is to match the surrounding code. For example, if you see that variables use a CamelCase style, match that. If they use underscores, or are lowercase, match that. Readability and consistency within a section of code is of greater importance than universal consistency.

Home is a place where you are without having to justify why you're there.

If you are writing a script that is more than 100 lines long, you should probably be writing it in Python instead. Bear in mind that scripts grow. Rewrite your script in another language early to avoid a time-consuming rewrite at a later date.

This code is MIT Licensed. Do stuff with it.

Just some dotfiles son

** auuuuugggghhhhhh **

Oh bother and blast, I am mere version 3 compiler and cannot see into the future.

You have given me a version 5 program. This means my time on earth has come.

You will have to kill me. You will uninstall me, and install a version five compiler. I will be no more. I will cease to exist.

Goodbye old friend.

I have a headache. I'm going to have a rest...

**

Prison mellowed him wonderfully...Suffering either embitters you or, mercifully, ennobles you

Zettelkasten

не болтай ногами, "don't dangle your legs"

I couldn't really learn Erlang, 'cos it didn't exist, so I invented it

I hate complexity. I tolerate it when there is no other way, but as my math kids say, if you have the right answer, it is beautiful and simple. Complex is reserved for when you haven't figured it out yet.

You must speak the native language of the community you want to be part of. If you deride their local language and proselytize Esperanto, the natives may not take kindly to you!

Finally, although the subject is not a pleasant one, I must mention PL/1, a programming language for which the defining documentation is of a frightening size and complexity. Using PL/1 must be like flying a plane with 7000 buttons, switches and handles to manipulate in the cockpit. I absolutely fail to see how we can keep our growing programs firmly within our intellectual grip when by its sheer baroqueness the programming language —our basic tool, mind you!— already escapes our intellectual control.

Argument three is based on the constructive approach to the problem of program correctness. Today a usual technique is to make a program and then to test it. But: program testing can be a very effective way to show the presence of bugs, but is hopelessly inadequate for showing their absence. The only effective way to raise the confidence level of a program significantly is to give a convincing proof of its correctness. But one should not first make the program and then prove its correctness, because then the requirement of providing the proof would only increase the poor programmer’s burden. On the contrary: the programmer should let correctness proof and program grow hand in hand. Argument three is essentially based on the following observation. If one first asks oneself what the structure of a convincing proof would be and, having found this, then constructs a program satisfying this proof’s requirements, then these correctness concerns turn out to be a very effective heuristic guidance. By definition this approach is only applicable when we restrict ourselves to intellectually manageable programs, but it provides us with effective means for finding a satisfactory one among these.

We all know that the only mental tool by means of which a very finite piece of reasoning can cover a myriad cases is called “abstraction”; as a result the effective exploitation of his powers of abstraction must be regarded as one of the most vital activities of a competent programmer. In this connection it might be worth-while to point out that the purpose of abstracting is not to be vague, but to create a new semantic level in which one can be absolutely precise.

The competent programmer is fully aware of the strictly limited size of his own skull; therefore he approaches the programming task in full humility, and among other things he avoids clever tricks like the plague.

Remember when, on the Internet, nobody cared that you were a dog?

In theory, you eschew ornamentation

C++ is several different languages in one compiler. You can use it as a stricter C, as a C with some syntactic support of ADTs, as C++98-style OO, or as a C++17 style meta-programming system. And I’ve probably missed a few. The resulting complexity requires a lot of discipline to use successfully, especially in a large team.

RIPJSB

“understanding hardware” was akin to fathoming the Tao of physical nature.

Kotok was not the only one preparing for the arrival of the PDP-1. Like a motley collection of expectant parents, other hackers were busily weaving software booties and blankets for the new baby coming into the family, so this heralded heir to the computing throne would be welcome as soon as it was delivered in late September.

weapons or tools that aren’t very trustworthy are held in very low esteem -- people really like to be able to trust their tools and weapons.

The man of the future. Hands on a keyboard, eyes on a CRT, in touch with the body of information and thought that the world had been storing since history began. It would all be accessible to Computational Man.

During the 1970s, when structured programming was introduced, Harlan Mills pointed out that the programming team should be organized like a surgical team--one surgeon and his or her assistants, not like a hog butchering team--give everybody an axe and let them chop away.

I am as proud as a mother hen

Open Source Won the Battle, But Lost the War... a larger share of computing is performed by fixed function products, where the ability to modify the product is not part of the value proposition for the customer. Now ironically, open source has flourished in these fixed function devices, but more often than not, the benefits of those freedoms being realized more by those making the products rather than end users (which actually was true of the software market even back then: Microsoft was a big consumer of open source software, but their customers were not). Similarly, one could argue that open source has struggled more in the general purpose desktop space than anywhere else, but as the web and cloud computing has grown, desktop computing has increasingly been used for a narrower purpose (primarily running a browser), with the remaining functions running in the cloud (ironically, primarily on open source platforms). In short: open source does really own the general purpose computing space, but the market has become more sophisticated.

Работаете братья, "keep working, brothers"

standard hackerese pejorative

But to Gosper, LIFE was much more than your normal hack. He saw it as a way to “basically do science in a new universe where all the smart guys haven’t already nixed you out two or three hundred years ago. It’s your life story if you’re a mathematician: every time you discover something neat, you discover that Gauss or Newton knew it in his crib. With LIFE you’re the first guy there, and there’s always fun stuff going on.”

"The lyf so short, the craft so long to lerne." - Chaucer

The original used the EBCDIC cent sign character to start and another cent sign to end the comment (i.e. programmer's two cents).

Bourne to Program, Type with Joy

PICNIC: Problem In Chair Not In Computer

ID10T

This might not seem like something that noteworthy, but I see it as the exact type of thing one would think of while taking a shower, which is why I love it.

I like to think of a cybernetic forest filled with pines and electronics where deer stroll peacefully past computers as if they were flowers with spinning blossoms.

It seems that many modern programmers prefer lasagna code to spaghetti code. I'm still debating which is worse. - Bill

Meritocracy was meant to be a satirical concept so clearly wrongheaded that nobody could think it was a good idea. There is no true meritocracy - there's only an arbitrarily chosen set of metrics that any given organisation considers to be of merit, and people who either benefit or lose out as a result of that specific choice. We don't understand community dynamics and the process of software development well enough to say with absolute certainty that a given set of metrics is objectively the correct measure, and as a result we cannot provide a meaningful definition of merit.

'Ken Thompson has an automobile which he helped design. Unlike most automobiles, it has neither speedometer, nor gas gauge, nor any of the other numerous idiot lights which plague the modern driver. Rather, if the driver makes a mistake, a giant “?” lights up in the center of the dashboard. “The experienced driver,” says Thompson, “will usually know what’s wrong.”'

Gods of BSD

Meanwhile, the GPL has legally enforced a consortia on major commercial companies. Red Hat, Novell, IBM, and many others are all contributing, and feel safe in doing so because the others are legally required to do the same. It's basically created a "safe" zone of cooperation, without anyone having to sign complicated legal documents. A company can't feel safe contributing code to the BSDs, because its competitors might simply copy it without reciprocating. There's much more corporate cooperation in the GPL'ed kernel code than with the BSD'd kernel code. Which means that in practice, it's actually been the GPL that's most "business-friendly". So while the BSDs have lost energy every time a company gets involved, the GPL'ed programs gain almost every time a company gets involved. And that explains it all.

No time for shoes; we sped here in our winged car.

ego death arms race

Dennis told me he was going to a class reunion at Harvard.

Me: "I guess you're the most famous member of your class."

dmr: "No, the Unabomber is."

give me a persian rug where the center looks like galaga

When "history" overtakes some new chunk of the recent past, it always comes as a relief--one thing that history does...is to fumigate experience, making it safe and sterile.... Experience undergoes eternal gentrification; the past, all parts of it that are dirty and exciting and dangerous and uncomfortable and real, turns gradually into the East Village.

The "Who wrote the Bourne shell?" question kind of reminds me of the old Bugs Bunny bit where he'd be on some radio gameshow and the host would ask, "Who's buried in Grant's Tomb?" and no one would get it right. (Except totally different because, of course, no one is buried in Grant's Tomb: Grant and his wife are entombed in sarcophagi above ground, not buried below.)

Prometheus: Get going then. Keep to the course you're on.

Ocean: You speak to one already under way. My four-foot bird is skimming with his wings the level paths of air. He will be glad to bend his knee, I think, in his own stall. (Ocean exits as he had entered, flying on his winged beast.)

Not everyone at the labs had a three-letter login. Bjarne Stroustrup had the login bs, despite several gentle suggestions from myself and others that he add a middle initial.

Before I was shot, I always thought that I was more half-there than all-there—I always suspected that I was watching TV instead of living life. People sometimes say that the way things happen in movies is unreal, but actually it's the way things happen in life that's unreal. The movies make emotions look so strong and real, whereas when things really do happen to you, it's like watching television—you don't feel anything. Right when I was being shot and ever since, I knew that I was watching television. The channels switch, but it's all television.

г на п (гавно на палке), "shit on a stick"

Under the siren song of affluence, we began offshoring critical production capacity in the 1960s for geopolitical reasons. In 1971, economist Nicholas Kaldor noted that American financial policies were turning "a nation of creative producers into a community of rentiers increasingly living on others, seeking gratification in ever more useless consumption, with all the debilitating effects of the bread and circuses of imperial Rome."

McIlroy implies that the problem is that people didn't think hard enough, the old school UNIX mavens would have sat down in the same room and thought longer and harder until they came up with a set of consistent tools that has "unusual simplicity". But that was never going to scale, the philosophy made the mess we're in inevitable. It's not a matter of not thinking longer or harder; it's a matter of having a philosophy that cannot scale unless you have a relatively small team with a shared cultural understanding, able to sit down in the same room.

p/q2-q4!

When port wine is passed around at British meals, one tradition dictates that a diner passes the decanter to the left immediately after pouring a glass for his or her neighbour on the right; the decanter should not stop its clockwise progress around the table until it is finished. If someone is seen to have failed to follow tradition, the breach is brought to their attention by asking "Do you know the Bishop of Norwich?"; those aware of the tradition treat the question as a reminder, while those who don't are told "He's a terribly good chap, but he always forgets to pass the port."

It is possible to make man suid to a user man. Then, if a cat directory has owner man and mode 0755 (only writable by man), and the cat files have owner man and mode 0644 or 0444 (only writable by man, or not writable at all), no ordinary user can change the cat pages or put other files in the cat directory. If man is not made suid, then a cat directory should have mode 0777 if all users should be able to leave cat pages there. - $ man man

The Collatz Conjecture was once called "a Russian plot to stagnate American mathematics"

rev < /usr/share/dict/web2 | sort | rev > ~/rhyming_dictionary.txt

If anyone even hints at breaking the tradition honoured since FØRTRAN of using i, j, and k for indexing variables, namely replacing them with ii, jj and kk, warn them about what the Spanish Inquisition did to heretics.

I remember a really interesting quote I've heard (forgive me if it's off, it's been a while): "originally a movie looks like it was made by 100 people, then you edit it to look like it was made by 1 person, and then the real magic happens when it looks like it was made by no one". I feel like spacemacs is currently on that 100 ppl choppiness atm, doom is at 1, and no one has figured out how to make it seem natural as it differs per person. I feel like the magic happens when I code my own config, as I know myself best. Idk if that makes sense.

The construction of software should be an engineering discipline. However, this doesn’t preclude individual craftsmanship. Think about the large cathedrals built in Europe during the Middle Ages. Each took thousands of person-years of effort, spread over many decades. Lessons learned were passed down to the next set of builders, who advanced the state of structural engineering with their accomplishments. But the carpenters, stonecutters, carvers, and glass workers were all craftspeople, interpreting the engineering requirements to produce a whole that transcended the purely mechanical side of the construction. It was their belief in their individual contributions that sustained the projects: We who cut mere stones must always be envisioning cathedrals. (Quarry worker’s creed).

Sometimes you want work computers to be sea monsters, not cattle.

Breakable Toys. You work in an environment that does not allow for failure. Yet failure is often the best way to learn anything. Only by attempting to do bold things, failing, learning from that failure, and trying again do we grow into the kind of people who can succeed when faced with difficult problems. Solution: Budget for failure by designing and building toy systems that are similar in toolset, but not in scope to the systems you build at work.

Lopatin’s own interests lay in filmmaking, and later, music journalism, but his parents’ experience with musical and creative censorship while behind the Iron Curtain would help inform his contextual, resourced approach to creating electronic music as OPN later on. “Music was rare and very special to them,” he remembers. “My dad has absolutely crazy stories about trading vodka for the microphone that a trolley driver uses to announce stops and then converting it for band practice, or listening to bootleg records pressed on X-rays. When you don’t have shit, you really learn to actually appreciate it. It’s so fundamental and yet so easy to overlook.”

Clifford Stoll: The first time you do something, it's science. The second time, it's engineering. The third time it's just being a technician. I'm a scientist -- once I do something I want to do something else.

Clifford Stoll: It's out of problems that you can't understand that you make progress. Doing science means bumping into something that makes you wonder, that you don't know the answer to, and in getting to the answer brings you to an understanding of the bigger world around us

The debugging strategy has been "Shake the tree, fix what falls out." That works really good at first, but now you have to shake the tree really hard to get anything to fall out.

Consider also this; all the time you spend studying is time you don't spend helping others, or keeping fit, or being a good friend. You need to decide what the balance of your priorities and time is, but I'd argue someone who spends more time seeking knowledge than being virtuous because seeking knowledge is virtuous is likely missing something very important. As for overspecialisation, people underestimate the importance of intersectionality. You cannot understand physics without a strong grasp of maths. You cannot understand literature without being aware of the historical context in which it was written. Study multiple areas to understand your own better would be my view, as it gives you that all-important tool: context.

Suppose that ah ken aw the pros and cons, know that ah’m gaunnae huv a short life, am ay sound mind etcetera, etcetera, but still want tae use smack? They won’t let ye dae it. They won’t let ye dae it, because it’s seen as a sign of thir ain failure. The fact that ye jist simply choose tae reject whit they huv tae offer. Choose us. Choose life. Choose mortgage payments; choose washing machines; choose cars; choose sitting oan a couch watching mind-numbing and spirit-crushing game shows, stuffing fuckin junk food intae yir mooth. Choose rotting away, pishing and shiteing yersel in a home, a total fuckin embarrassment tae the selfish, fucked-up brats ye’ve produced. Choose life. Well, ah choose not tae choose life. If the cunts cannae handle that, it’s thair fuckin problem. As Harry Lauder sais, ah jist intend tae keep right on to the end of the road.

Years ago, anthropologist Margaret Mead was asked by a student what she considered to be the first sign of civilization in a culture. The student expected Mead to talk about fishhooks or clay pots or grinding stones. But no. Mead said that the first sign of civilization in an ancient culture was a femur (thighbone) that had been broken and then healed. Mead explained that in the animal kingdom, if you break your leg, you die. You cannot run from danger, get to the river for a drink or hunt for food. You are meat for prowling beasts. No animal survives a broken leg long enough for the bone to heal. A broken femur that has healed is evidence that someone has taken time to stay with the one who fell, has bound up the wound, has carried the person to safety and has tended the person through recovery. Helping someone else through difficulty is where civilization starts, Mead said. We are at our best when we serve others. Be civilized.

Does what it says on the tin.

I have the right ideas, but my words are too complicated. I need to simplify them, so that people won't get lost in the dark when they see and hear them. I want them to shine like beacons of light in a world of overly complicated darkness. One day I will find the right words, and they will be simple.

In modern parlance, every single instruction was followed by a GO TO! Put that in Pascal's pipe and smoke it.

I have often felt that programming is an art form, whose real value can only be appreciated by another versed in the same arcane art;

Quiche Eaters

Most present-day inventions, it seems to me, do not differ much from the way humanity has been picturing them in imagination for countless generations. The submarine and aeroplane must have been foreseen by many of our species from the time they first observed the ways of the fish and the bird. All tele-instruments are probably what men always hoped they might be; and the destructive power of our hydrogen bombs is no more terrifying than the Biblical prediction of their impact. But I doubt whether even the most fertile imagination possessed by a mathematician a short century ago could have foreseen the wondrous features of our high-speed electronic computer -

A good programmer was concise and elegant and never wasted a word. They were poets of bits.

Blurbs are hilarious to me. Even modest and prudent writers suddenly become the most fiery and flowery spokespeople when asked to write a blurb. It’s amazing. It’s this whole register of language people would never use otherwise, part self-help and part advertising—you need this book, this book will help you live—written by “writers” who are all trying to outdo themselves. So it seemed like perfect source material for a poem.

We say most aptly that the Analytical Engine weaves algebraical patterns just as the Jacquard-loom weaves flowers and leaves

This is computer science. There aren't restrictions. I can do anything I want. It's just bits. You don't own me.

conceptual integrity

Scheme is an artisan’s little hammer

Dogfood. It is good practice to use the software one is developing on a daily basis, even when one is not actively working on developing or testing the product. This is known as "eating one's dogfood". Many bugs, usually user interface (UI) issues but also occasionally web standards bugs, are found by people using daily builds of the product while not actively looking for failures. A bug found using this technique which prevents the use of the product on a regular basis is called a "dogfood" bug and is usually given a very high priority.

The code base's age makes it likely that its authors by now either have advanced to management positions where reading books as this one is frowned upon or have an eyesight unable to deal with this book's fonts. These changes conveniently provide me with a free license to criticize code without fear of nasty retributions.

A quick way to judge a language implementation is by inspecting its string concatenation function. If concat is implemented as a realloc and memcpy, well, the upstairs lights probably aren’t set to full brightness.

Read broadly (there are a few books recommended in my earlier columns). Always take jobs that expand what you might do (I rarely take a job in the same industry twice). Have your own projects that you're interested in, and they don't need to be as large as a FreeBSD. Code for fun and not always for profit if you can afford it. I started by "playing with computers" as it was called when I was young and I continue to "play" as well as work. Undirected learning has a place.

Multics Emacs proved to be a great success — programming new editing commands was so convenient that even the secretaries in his office started learning how to use it. They used a manual someone had written which showed how to extend Emacs, but didn't say it was programming. So the secretaries, who believed they couldn't do programming, weren't scared off. They read the manual, discovered they could do useful things and they learned to program. So Bernie saw that an application — a program that does something useful for you — which has Lisp inside it and which you could extend by rewriting the Lisp programs, is actually a very good way for people to learn programming. It gives them a chance to write small programs that are useful for them, which in most arenas you can't possibly do. They can get encouragement for their own practical use — at the stage where it's the hardest — where they don't believe they can program, until they get to the point where they are programmers.

Excellent testing can make you unpopular with almost everyone.

But I was never a debugger-first programmer. None of us in the lab were, and that's probably why the debugging setup in Unix is to this day so weak compared to what other systems provide.

Rob's Rule - what a program presents as output it should also accept as input.

> "... But truth be known, I'm sort of a printf() debugger."

So am I, and ISTR Brian Kernighan and Larry Wall saying that they are, as well.

Who shall be the arbiters of good taste?

Yes, Virginia, it had better be unsigned

An étude (a French word meaning study) is an instrumental musical composition, usually short, of considerable difficulty, and designed to provide practice material for perfecting a particular musical skill.

Kabelsalat

If you want to abstract away the machine from the end user, it's wise to not propagate its internal word size to users trying to build user interfaces for scientific applications!

Finally there came in the mail an invitation from the Institute for Advanced Study: Einstein…von Neumann…Wyl…all these great minds! They write to me, and invite me to be a professor there! And not just a regular professor. Somehow they knew my feelings about the Institute: how it’s too theoretical; how there’s not enough real activity and challenge. So they write, “We appreciate that you have a considerable interest in experiments and in teaching, so we have made arrangements to create a special type of professorship, if you wish: half professor at Princeton University, and half at the Institute.”

Institute for Advanced Study! Special exception! A position better than Einstein, even! It was ideal; it was perfect; it was absurd!

It was absurd. The other offers had made me feel worse, up to a point. They were expecting me to accomplish something. But this offer was so ridiculous, so impossible for me ever to live up to, so ridiculously out of proportion. The other ones were just mistakes; this was an absurdity! I laughed at it while I was shaving, thinking about it.

And then I thought to myself, “You know, what they think of you is so fantastic, it’s impossible to live up to it. You have no responsibility to live up to it!”

It was a brilliant idea: You have no responsibility to live up to what other people think you ought to accomplish. I have no responsibility to be like they expect me to be. It’s their mistake, not my failing.

It wasn’t a failure on my part that the Institute for Advanced Study expected me to be that good; it was impossible. It was clearly a mistake—and the moment I appreciated the possibility that they might be wrong, I realized that it was also true of all the other places, including my own university. I am what I am, and if they expected me to be good and they’re offering me some money for it, it’s their hard luck.

capitalism in molecular life happens in plasma

I like to think of RPN as the computing equivalent of mise en place; before you start cooking, you get all your ingredients lined up.

Parse, don’t validate

Europeans tend to pronounce his name properly, as Nih-klaus Virt, while Americans usually mangle it into something like Nickles Worth. That has led to the programmer joke saying Europeans call him by name while Americans call him by value.

One thing to keep in mind when looking at GNU programs is that they're often intentionally written in an odd style to remove all questions of Unix copyright infringement at the time that they were written. The long-standing advice when writing GNU utilities used to be that if the program you were replacing was optimized for minimizing CPU use, write yours to minimize memory use, or vice-versa. Or in this case, if the program was optimized for simplicity, optimize for throughput.

an ornamental text

quintessentially collegiate in the sense that it involved ordering pizza.

If the Author of the Software (the "Author") needs a place to crash and you have a sofa available, you should maybe give the Author a break and let him sleep on your couch. If you are caught in a dire situation wherein you only have enough time to save one person out of a group, and the Author is a member of that group, you must save the Author.

>>> I wish it was as easy for others to have such satisfaction these days.

>>Amen to that. I think we all lived, you Bell Labs people especially, in a simpler time. We were trying to fit into 64K, split I/D 128K, my Z80 was 64K but some extra for graphics, then the VAX came and we were trying for 1MB, Suns with 4MB.

So small mattered a lot and that meant the Unix philosophy of do one thing and do it well worked quite nicely.

What that also meant, to people coming on a little bit after, was that it was relatively easy to modify stuff, the stuff was not that complex. Even I had an easy time, my prime was back at Sun when SunOS was a uniprocessor OS. That is dramatically simpler than a fully threaded SMP OS that has support for TCP offloading, NUMA, etc, etc. I really don't know how systems people do it these days, it is a much more complex world. So I'm with Norm, it was fun back in the day to be able to come in and have a big impact. I too wish that it was as easy for young people to come in and have that impact. I've done that and it was awesome, these days, I have no idea how I'd make a difference.

>By doing something besides systems. There are tons of open source projects at the user level that make a difference. Consider something like AsciiDoc. Less than 10 years old (methinks), and in use for production by at least one major technical publisher that I know of. (And it sure beats the pants off of DocBook XML.) Or the work I do on gawk, Chet on Bash, other GNU bits. There's a whole world out there besides just the kernel.

Rules, guidelines, and principles are gems of distilled experience that should be studied and respected. But they’re never a substitute for thinking critically about your work.

The other way, which is actually a really meaningful thing to me, and it’s super super subtle, is that I included a fast-forward button, so you can fast forward the music, you can skip to the next track, et cetera. But the subtle part is that, when you press the fast-forward button, you have changed the playback speed of the piece of music, but the random-number generator is moving ahead at the same rate. And so, the result of that is that you’ve now offset the music a little bit from the random sequence that it would have gotten had you not pressed that button. And, so you are suddenly getting an entirely new version of the piece. It sounds pretty much identical, because I use randomness in this textural level in the sound synthesis. It’s not like it has any control at the actual musical level. But the philosophical side of it is that if you never press the fast-forward button, then it’s this closed computational system, and it’s basically output-only. You know, you’re turning on the chip, the code starts running and it’s this closed system that mirrors some basic number theory. And the moment you press the button, then you’ve kind of interjected in this pure closed system, and you’ve brought in all of the messiness of the real world — like whether or not our time and space is grid-based on the lowest level, or whether or not we have free will, or where randomness comes in in quantum mechanics. All of these messy real world things suddenly come into the system and it’s no longer this clean abstract thing. And so, for me, what that also means is that the work of mathematicians, like Alan Turing and Kurt Gödel, these people who really explored the limits of computation, and the limits of mathematics, really — their work is applicable until you press the fast-forward button. It’s applicable so long as this is a deterministic system, and you kind of kill that determinism when you press the fast-forward button. So I think of that as, like, playing the piece.

And like the wooden boat, Morse code gives us a link with the past. The old salts of radio are out there, still pounding the brass, and willing to chat with anyone who makes a credible effort to enter their world.

I travel with a small radio that fits into an old laptop computer bag. I'll throw a wire out the hotel window, slip on my headphones, dim the room lights, and see what's coming in. Sometimes I'll hear weak signals with exotic distortions picked up in the ionosphere as the waves crossed the equator or bounced over the pole. I feel lightning bolts jump from my fingers when I squeeze the key to send my own thoughts back the other way.

Sometimes I'll hear the crisp clean signal of an A-1 operator, probably a retired operator, probably living in Phoenix and having trouble sleeping. Once for them code unlocked adventure. They traveled the world keeping in touch with dits and dahs. They make it sound like poetry. I like to meet these guys by radio. In code they don't seem so old.

KMS UXA DRM OMG WTF BBQ

Incidentally, adjusting the Q on a random EQ band with no cut or boost is a surefire way of convincing most clients that something sounds better...

What's interesting is that at the same time that we were doing AWK, there was a project at Xerox PARC called ... Poplar ... which had fairly similar goals. That is, they were going to take these files consisting of a lot of characters and you break them apart in various ways and you process them using some language and you pass them on. And they put a lot of work into clever ideas. It was a functional programming language. Which was ... especially then a big deal, now it's less of a big deal. And it was supposed to be user friendly ... They had all sorts of stuff. And AWK lived and Poplar died. And I don't know, you know, this has affected my view about people who talk about user interfaces fairly severely. I'm no longer convinced anybody knows anything about user interface. It's clear some things are easier to use and some things are harder to use. That's okay. But it's also clear that people, people learn a lot. ... although AWK's language is both C-like and not real elegant, a lot of AWK programming isn't done by getting the reference manual and writing code. What is done is by finding some AWK program that's very similar to what you want to do and changing it, okay? And then the fact that it's a little weird isn't so bad. It's, you know what you're just changing. AWK lives partly, I think, because of its programming by example.

computerology

I think I was here two years before I understood anything Ken said. Because if you ask Ken a question, he sort of gives you a one line answer to the question he thinks you ought to have asked approximately. I don't know what he thinks he's doing, but that's certainly what it seemed to be like he was doing. And you have to know a lot to understand a one line answer to these things. And after a while the answers become extremely informative. But it really took a long time before I just understood anything Ken was saying.

I’d love to see Jack Tramiel and Richard Stallman in a debate. God, that would be just great.

I think almost every experienced programmer has gone through three stages and some go through four:

Cowboy coders or nuggets know little to nothing about design and view it as an unnecessary formality. If working on small projects for non-technical stakeholders, this attitude may serve them well for a while; it Gets Things Done, it impresses the boss, makes the programmer feel good about himself and confirms the idea that he knows what he's doing (even though he doesn't).

Architecture Astronauts have witnessed the failures of their first ball-of-yarn projects to adapt to changing circumstances. Everything must be rewritten and to prevent the need for another rewrite in the future, they create inner platforms, and end up spending 4 hours a day on support because nobody else understands how to use them properly.

Quasi-engineers often mistake themselves for actual, trained engineers because they are genuinely competent and understand some engineering principles. They're aware of the underlying engineering and business concepts: Risk, ROI, UX, performance, maintainability, and so on. These people see design and documentation as a continuum and are usually able to adapt the level of architecture/design to the project requirements.

At this point, many fall in love with methodologies, whether they be Agile, Waterfall, RUP, etc. They start believing in the absolute infallibility and even necessity of these methodologies without realizing that in the actual software engineering field, they're merely tools, not religions. And unfortunately, it prevents them from ever getting to the final stage, which is:

Duct tape programmers AKA gurus or highly-paid consultants know what architecture and design they're going to use within five minutes after hearing the project requirements. All of the architecture and design work is still happening, but it's on an intuitive level and happening so fast that an untrained observer would mistake it for cowboy coding - and many do.

Generally these people are all about creating a product that's "good enough" and so their works may be a little under-engineered but they are miles away from the spaghetti code produced by cowboy coders. Nuggets cannot even identify these people when they're told about them, because to them, everything that is happening in the background just doesn't exist.

Some of you will probably be thinking to yourselves at this point that I haven't answered the question. That's because the question itself is flawed. Cowboy coding isn't a choice, it's a skill level, and you can't choose to be a cowboy coder any more than you can choose to be illiterate.

If you are a cowboy coder, then you know no other way.

If you've become an architecture astronaut, you are physically and psychologically incapable of producing software with no design.

If you are a quasi-engineer (or a professional engineer), then completing a project with little or no up-front design effort is a conscious choice (usually due to absurd deadlines) that has to be weighed against the obvious risks, and undertaken only after the stakeholders have agreed to them (usually in writing).

And if you are a duct-tape programmer, then there is never any reason to "cowboy code" because you can build a quality product just as quickly.

One thing you have to be careful about, though, is that duct tape programmers are the software world equivalent of pretty boys… those breathtakingly good-looking young men who can roll out of bed, without shaving, without combing their hair, and without brushing their teeth, and get on the subway in yesterday’s dirty clothes and look beautiful, because that’s who they are. You, my friend, cannot go out in public without combing your hair. It will frighten the children. Because you’re just not that pretty.

Hackers don't write documentation, or plan out their programming, instead they write Manifestos.

"Hypergrunge" was more specific, because a lot of the peripheral ideas about this record are like very directly dealing with observations in the music industry, and grunge is like, an insane thing, because it's a fallacy, it's totally fabricated. All grunge is hypergrunge. It's been synthesized by all of these marketing factors. You know about grunge speak? Or the story of grunge speak? There was a secretary at the Sub Pop office in the '90s, she was always getting interviewed because journalists would just come in and be like "So, what's grunge?" And she got sick of it, and she started tricking them or lying to them, like "Yeah, there's a whole language to grunge, dude." And she made up all these words that were like, grunge speak. [The New York Times] went and published this thing that she tricked them with, and I was so inspired by that.

How does one patch KDE2 under FreeBSD?

pete burkeet

this is a brilliant exercise. we need to talk.

trips around the sun

These compromises mean that we can best think about typeface design as the creation of a wonderful collection of letters but not as a collection of wonderful letters.

the medium is the massage

Piratical Practices

Ever since I heard this story, I can't help but see that we live in a world brimming with "electric" meat. Learn to find it. Then get out there and grab it.

I have not reached the burrito point yet

These digits that Samson had jammed into the computer were a universal language that could produce anything -- a Bach fugue or an antiaircraft system.

Yes, formerly they wanted your blood, now they want your ink.

der Herr Warum

Ever since the 50's, the fashion in language design has been minimalism. Languages were designed around paradigms, and paradigms were judged on elegance. If your choice of paradigm was lists, the result was LISP. If you decided on strings, you invented SNOBOL. Arrays, you wound up with APL. If your reasoning led you to logic, you ended up with Prolog. If you thought that descriptions of algorithms must be the basis of programming, then your fate was Algol. If assembly language stayed at the core of your world view, what you created would be an imperative language and might look like any of C, BASIC or FORTRAN. If stacks were your thing, you created a language that looked like Forth. And who knows if the belief in objects as the one paradigm will ever again see Orodruin and feel the heat of the flames in which it was forged. Some of these languages were totalitarian -- they insisted everything be shoehorned into their central concept. I recall some very unpleasant struggles with early dialects of SNOBOL, LISP and Prolog. Imperative languages tended to be more elastic. But, while the strictness of adherence varies, almost every language invented over the past 50 years bears the clear stamp of minimalism.

LALR parsing rode on the backs of yacc and the Portable C Compiler into total dominance over parsing mindshare. LALR came to define production-quality parsing. In 1978, there had been two C compilers, both at AT&T's Bell Labs -- Ritchie's original, and Johnson's Portable C Compiler, which was about to replace it. Neither was a commercial product -- AT&T was a regulated monopoly, banned from selling hardware or software. By 2006, C was the most important systems programming language in a world which had become far more dependent on computer systems. There were now many C compilers, several of them of great commercial importance. Arguably, one of these was the most visible production-quality C compiler. This no longer came from AT&T. The leader among C compilers in 2006 was GCC, the GNU foundation's flagship accomplishment. GCC can be said to have clearly taken this lead by 1994, when BSD UNIX had switched from the Portable C Compiler to GCC. This was a revolution of another kind, but as far as the parsing algorithm went, it was "Hail to the new boss... same as the old boss". GCC parsing was firmly in the LALR tradition.

But on 28 February 2006, the nearly 3 decades of LALR dominance were over. That's the date of the change log for GCC 4.1. It's a long document, and the relevant entry is deep inside it. It's one of the shorter change descriptions. In fact, it's exactly this one sentence: The old Bison-based C and Objective-C parser has been replaced by a new, faster hand-written recursive descent parser. For me, that's a little like reading page 62,518 of the Federal Register and spotting a one-line notice: Effective immediately, Manhattan belongs to the Indians.

The history is not being told in order. This is not because I am attempting to ape "Pulp Fiction". It's because the series was originally planned as perhaps two or three blog posts and has grown in scope. I'm stuck now with telling the tale using flashbacks and fast-forwards, but I'll make the process as easy as possible.

Historians can be divided into two types -- the Braudels and the Churchills. Fernand Braudel insisted on accumulation of detail, and avoided broad sweeping statements, especially those of the kind that are hard to back up with facts. Winston Churchill thought the important thing was the broad sense, and that historians should awaken the reader to what really mattered, not deaden him with forgettable detail.

But it is also true that some of the programming skills we've developed over the years could aptly be called symptoms.

Colorless green ideas sleep furiously

If you want to build a ship, don't drum up the men to gather wood, divide the work and give orders. Instead, teach them to yearn for the vast and endless sea.

If languages were free, this is the kind of perfection that we would seek -- languages precisely fitted to their domain, so that adding to them cannot make them better.

Ada's "Notes" were written 20 years after Mary Shelley, while visiting Ada's father in Switzerland, wrote the novel Frankenstein. For Ada's contemporaries, announcing that you planned to create a machine that composed music, or did advanced mathematical reasoning, was not very different from announcing that you planned to assemble a human being in your lab.

2006-10-31: The default prefix used to be "sqlite_". But then McAfee started using SQLite in their anti-virus product and it started putting files with the "sqlite" name in the c:/temp folder. This annoyed many Windows users. Those users would then do a Google search for "sqlite", find the telephone numbers of the developers and call to wake them up at night and complain. For this reason, the default name prefix is changed to be "sqlite" spelled backwards. So the temp files are still identified, but anybody smart enough to figure out the code is also likely smart enough to know that calling the developer will not help get rid of the file.
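The renaming described in that comment is easy to picture. Here is a minimal sketch in Python, not SQLite's actual C source; the helper name `temp_prefix` is hypothetical and exists only for illustration. It shows how spelling "sqlite" backwards produces the prefix seen on such temp files.

```python
def temp_prefix(name: str = "sqlite") -> str:
    """Return the given name spelled backwards, followed by an underscore.

    Hypothetical helper for illustration only; it mirrors the idea in the
    comment above rather than any real SQLite API.
    """
    return name[::-1] + "_"


if __name__ == "__main__":
    # Prints the reversed prefix for the default name "sqlite".
    print(temp_prefix())
```

The reversed name still identifies the files to anyone who reads it carefully, which is the point the comment makes.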

Newsgroups: comp.os.linux.advocacy,gnu.misc.discuss

John Dyson said:

> not most) users. I attain no high in passive smoking, and only feel
> the anxiety. The very sad thing is to avoid the anxiety, pot users
> often either seek more pot, alcohol or benzo's for temporary relief.
> This is a really silly vicious circle for a little short term
> relief. Those who think that pot is harmless are diluding themselves
> or even simply ignorant.

That's because your not using the Scheme Configurable Window Manager.
I found that having the proper WM while toking is extremely important.
You need one that absolutely minimizes use of the rodent, and with
scwm's synthetic events, awesome key binding supports and scripting, I
have a setup which allows me to do everything without pushing about
the cursed rolly thing. Thank you Maciej for thinking about all of us
stoners when designing SCWM.

It's like herding cats

Bikeshed

The alternatives are much worse. With informal and non-standardized error messaging you would have to know not only the programming language itself but its error messaging Pidgin as well. Every C++ practitioner can confirm that reading STL messages is an art by itself. It takes knowledge, patience, determination and, occasionally, a crystal ball to build a firm understanding of a reported problem.

one must understand that Unix commands are not a logical language. They are a natural language--in the sense that they developed by organic evolution, not "intelligent design".

Less traditional symbols are also useful. For example: ☎->✆(); // take my phone (☎) off hook (✆)

As many of you know, the rule with Unix was "you can touch anything, but if you change it, you own it." This was a great way to turn arguments into progress.

…I realised that I wanted to read about them what I myself knew. More than this—what only I knew. Deprived of this possibility, I decided to write about them. Hence this book.

URL shorteners may be one of the worst ideas, one of the most backward ideas, to come out of the last five years. In very recent times, per-site shorteners, where a website registers a smaller version of its hostname and provides a single small link for a more complicated piece of content within it… those are fine. But these general-purpose URL shorteners, with their shady or fragile setups and utter dependence upon them, well. If we lose TinyURL or bit.ly, millions of weblogs, essays, and non-archived tweets lose their meaning. Instantly. To someone in the future, it’ll be like everyone from a certain era of history, say ten years of the 18th century, started speaking in a one-time pad of cryptographic pass phrases.

But the biggest burden falls on the clicker, the person who follows the links. The extra layer of indirection slows down browsing with additional DNS lookups and server hits. A new and potentially unreliable middleman now sits between the link and its destination. And the long-term archivability of the hyperlink now depends on the health of a third party. The shortener may decide a link is a Terms Of Service violation and delete it. If the shortener accidentally erases a database, forgets to renew its domain, or just disappears, the link will break. If a top-level domain changes its policy on commercial use, the link will break. If the shortener gets hacked, every link becomes a potential phishing attack.

I think you’ll see what I mean if I teach you a few principles magicians employ when they want to alter your perceptions… Make the secret a lot more trouble than the trick seems worth. You will be fooled by a trick if it involves more time, money and practice than you (or any other sane onlooker) would be willing to invest.

it was the right thing to do. wish i had thought of it. i was too busy saving bytes.

you could have invented ...

Richard Stallman, famous for Emacs, founder of GNU Project and Free Software Foundation presents a wonderful beard. Although Eric Raymond did the Art of Unix programming, he has only really been famous for Fetchmail, hence his smaller mustache

Shannon says this can't happen.

“My students, once they are filled up with new ecological knowledge and have developed an awareness of our situation, always say, ‘We have to tell people what’s happening in the world. If they only knew what they were doing, they would stop.’ But, it’s not true. We are all saturated with data. We do know what we are doing...The data may change our minds, but we need poetry to change our hearts”

Multicores r us

The Net interprets censorship as damage and routes around it

Syntactic sugar causes cancer of the semicolon.

If you know the way broadly, you will see it in everything

You're Holding It Wrong

The increasing population in the US, and the demands of Congress to ask more questions in each census, were making the processing of the data a longer and longer process. It was anticipated that the 1890 census data would not be processed before the 1900 census was due unless something was done to improve the processing methodology. Herman Hollerith won the competition for the delivery of data processing equipment to assist in the processing of the data from the 1890 US Census, and went on to assist in the census processing for many countries around the world. The company he founded, the Tabulating Machine Company, eventually became one of the companies merged into the Computing-Tabulating-Recording (C-T-R) Company in 1911, which was renamed IBM in 1924.

Unlike music, scientific work does not come with liner notes. Perhaps it should.

Still, I recall a brief exchange with a physicist who was sitting next to me listening to a panel discussion on Theoretical Computer Science and Physics (which took place in the early 1990s): They [the TCS guys] talk as if a bit is as fundamental as an electron - he told me with amazement. That's of course wrong, a bit is far more fundamental - I answered to his even greater amazement. Needless to say, he did not talk to me during the rest of the panel...

An electron is merely a specific model of a specific phenomenon. It is a very important model, but how can you compare its importance to the importance of the notion of a model? But, then, all models are built of binary attributes.

This reminds me of a drive from MIT to Providence that Shafi and I made in the mid-1980s. We stopped in a diner on the way, got some food and coffee, and then Shafi asked the attendant Do you have the notion of a refill? The answer was: Yes, we do have refills, but what is a notion?

Indeed, people may use notions without having a notion of a notion, and likewise they may think of bits without a clear conceptualizing of the pure notion of a bit. In both cases, these notions exist before we conceptualize them; these notions are preconditions to any conceptualization.

He who understands Archimedes and Apollonius will admire less the achievements of the foremost men of later times.

Alright, so here we are, in front of the, er, elephants. And the cool thing about these guys is that they have really, really, really long trunks. And that’s cool.

Long ago, I observed that Edsger Dijkstra’s dining philosophers problem received much more attention than I thought it deserved. For example, it isn’t nearly as important as the self-stabilization problem, also introduced by Dijkstra, which received almost no notice for a decade. I realized that it was the story that went with it that made the problem so popular. I felt that the Byzantine generals problem (which was discovered by my colleagues at SRI International) was really important, and I wanted it to be noticed. So, I formulated it in terms of Byzantine generals, taking my inspiration from a related problem that I had heard described years earlier by Jim Gray as the Chinese generals problem.

On April 12, Kevin MacKenzie emails the MsgGroup a suggestion of adding some emotion back into the dry text medium of email, such as -) for indicating a sentence was tongue-in-cheek. Though flamed by many at the time, emoticons became widely used after Scott Fahlman suggested the use of :-) and :-( in a CMU BBS on 19 September 1982.

In Turkish there is a simpler solution to this: you just say, “kolay gelsin Steve”

enjoy the music and this life we have:)

I am regularly asked what the average Internet user can do to ensure his security. My first answer is usually 'Nothing; you're screwed'

(On Paul Vixie) Yeah, that unibrow is epic! Maybe that is why he is so good at networking. Even his brow is connected! hahahah.

boiling the power users out

Common Lisp macros are to C++ templates what poetry is to IRS tax forms

Hence the description of Windows 95 as "a 32-bit extension to a 16-bit patch to an 8-bit OS originally for a 4-bit chip written by a 2-bit company that doesn't care 1 bit about its users."

It began badly. We were walking along the South Downs Way in early summer, the sun glittering on the English Channel on our right, the Weald of Sussex stretching away to our left. “How big,” asked Arthur, “should a text editor be?”

Everyone knows how C programs look: tall and skinny. Whitney’s don’t.

Imagine if there were a tunnel which ran into your basement from the outside world, ending in a sturdy door with four or five high-security locks which anybody could approach completely anonymously. A mail slot in the door allows you to receive messages and news delivered through the tunnel, but isn't big enough to allow intruders to enter. Now imagine that every time you go down into your basement, you found several hundred letters piled up in a snowdrift extending from the mail slot, and that to find the rare messages from your friends and family you had to sort through reams of pornography of the most disgusting kind, solicitations for criminal schemes, “human engineered” attempts to steal your identity and financial information, and the occasional rat, scorpion, or snake slipped through the slot to attack you if you're insufficiently wary. You don't allow your kids into the basement any more for fear of what they may see coming through the slot, and you're worried by the stories of people like yourself who've had their basements filled with sewage or concrete spewed through the mail slot by malicious “pranksters”. Further, whenever you're in the basement you not only hear the incessant sound of unwanted letters and worse dropping through the mail slot, but every minute or so you hear somebody trying a key or pick in one of your locks. As a savvy basement tunnel owner, you make a point of regularly reading tunnel security news to learn of “exploits” which compromise the locks you're using so you can update your locks before miscreants can break in through the tunnel. You may consider it wise to install motion detectors in your basement so you're notified if an intruder does manage to defeat your locks and gain entry. As the risks of basement tunnels make the news more and more often, industry and government begin to draw up plans to “do something” about them. 
A new “trusted door” scheme is proposed, which will replace the existing locks and mail slot with “inherently secure” versions which you're not allowed to open up and examine, whose master keys are guarded by commercial manufacturers and government agencies entirely deserving of your trust. You may choose to be patient, put up with the inconveniences and risks of your basement tunnel until you can install that trusted door. Or, you may simply decide that what comes through the tunnel isn't remotely worth the aggravation it creates and dynamite the whole thing, reclaiming your basement for yourself.

If there’s no such thing as anonymous data, does privacy just mean security?

non-fungible

verb 99 noun 62